A SIMD computer

What is this about ?

This is a highly-parallel architecture computer - to the point of being a single-bit processor. Naturally, wider-width operations can be built up from iterated single-bit ones.

A single-bit add generates a single bit carry, that is used for the next bit. But wide words take longer to process.

This architecture can perform like fully associative memory which has only specialised chips that use it - it must just be done at one cycle per bit.

Why is this important ?

The CPU is so small it can live in the RAM chip itself. This unlocks the bus bandwidth issue that has spoiled Moore’s law progression of CPU speeds that we seem to be falling behind.

Why is parallel computing important ?

We are approaching many fundamental limits when it comes to fabrication of chips and the boards they are attached to - the speed of light has limited coherent clocked systems on circuit boards for more than a decade.

Processor speeds have remained static at about 1 to 3 Ghz for years.

Bus sizes have expanded from 4 bits all the way up to 64 bits - wider if they stay on-chip, like Intel x86’s MMX, SSE and AVX extensions that can go to 512 bits.

Making buses wider becomes unwieldy, and consumes a lot of power. Power is a critical factor in supercomputers - often they are ranked by that single metric.

This architecture allows numbers like 64k bit bus widths - without the power penalty.

These huge advantages require us to properly research it to see if algorithm advances can make use of in-situ memory computation.

Advances in processor throughput have come from multi-core architectures, and software that is able to use it - this has required change of algorithms.

Computation-limited scientific computing has moved to GPUs - the benefit of multiple cores and on-chip buses outweighing the painful adjustment of computer algorithms to fit the multi-core GPU processors.

What is SIMD ?

SIMD is an acronym for Single Iinstruction Multiple Data - the idea of a single instruction stream controlling many computers with their own slice of the data. It is like an orchestra conductor giving the same beat information to many musicians. MMX, SSE and AVX, and the GPU chips are all SIMD.

A more flexible approach is MIMD - separately-threaded processors working with their own little programs. An example of this is the Cell processor by IBM, used in the Sony Playstation 3, or the Transputer, a British microprocessor from the 1980s.

The problem is how to program the machine. During the life of computers, we have been steered by what they do - sequential programming. The first microprocessor was a 4 bit computer, with a sequential series of instructions - if tests and while loops. Little has changed - things have got faster, and wider - pipelining has introduced a limited level of parallelism.

But we must change this mindset, and think about how to use multiple processors at the algorithm stage, rather than bolting limited parallelism onto a sequential program.

This SIMD processor is an architecture to allow exploration of algorithms that use plentiful distributed, relatively slow, computing to solve a certain class of problems.

Nothing about sequential computing is going away. But now computing is cheaper than the data busses carrying data between chips. What can benefit from this decoupling of dependencies ?

What is a good communications infrastructure for these parallel CPUs ?

Implementation

There are two distinct units to the SIMD computer -

- A Forth Sequencer, to feed instructions to the CPUs

- The SIMD engine - RAM blocks with bit-serial CPUs on every data line, with instructions and RAM addresses being fed from the sequencer.

There is also an SPI interface for loading and reading of the data array. For data I/O, a Raspberry Pi is used, via SPI busses. This will be a definite bottleneck in the system - but we start small.

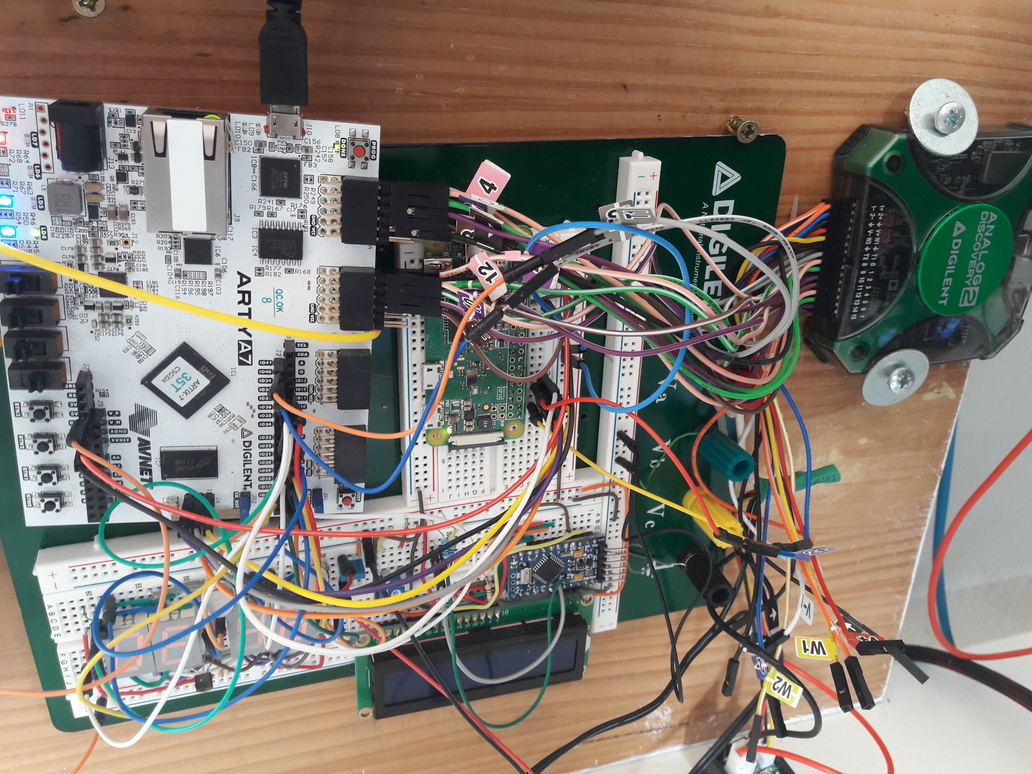

These are all, besides the Rpi, implemented inside a single Artix7 FPGA.

Why has this not been done before ?

There have been a few attempts at fully-associative computers, but I have not seen anything with this bit-serial CPU.

One of the main problems is programming the machines - it requires a different approach to the algorithms.

There are also some steep hurdles to this architecture, I/O bandwidth being the most pressing, long term.